The latest release of the Visual Basic Upgrade Companion improves the support for moving from VB6.0's On Error Statements to C# structured error handling, using try/catch blocks. In this post I will cover a couple of examples on how this transformation is performed.

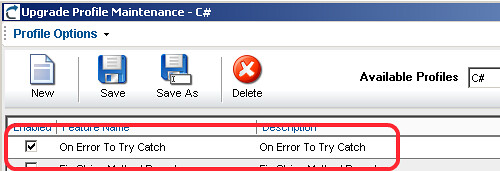

First off, this is something that you may not need or want in your application, so it is a feature managed through the Migration Profile of the application. To enable it, make sure the "On Error To Try Catch" feature is turned on in the Profile.

Now let's get started with the examples.

On Error Resume Next

First off, let's cover one of the most frustrating statements to migrate from VB6.0: the dreaded On Error Resume Next. This statement tells VB6.0 to basically ignore errors and continue execution no matter what. VB6.0 can recover from some errors, so an application can continue without being affected. These same errors, however, can cause an exception in .NET, or may leave the application in an inconsistent state.

Now let's look at a code example in VB6.0:

Private Sub bttnOK_Click()

On Error Resume Next

MsgBox ("Assume this line throws an error")

End Sub

The VBUC would then leave it as follows in C#:

private void bttnOK_Click( Object eventSender, EventArgs eventArgs)

{

//UPGRADE_TODO: (1069) Error handling statement (On Error Resume Next) was converted to a complex pattern which might not be equivalent to the original.

try

{

MessageBox.Show("Assume this line throws an error",

Application.ProductName);

}

catch (Exception exc)

{

throw new Exception("Migration Exception: The following exception could be handled in a different way after the conversion: " + exc.Message);

}

}

Because of this, the decision was made to wrap the code that is in the scope of the On Error Resume Next statement in a try/catch block. This is likely the way it would be implemented in a "native" .NET way, as there is no real equivalent functionality to tell C# to ignore errors and continue. Also, the VBUC adds a comment (an UPGRADE_TODO), so the developer can review the scenario and make a judgement call on whether to leave it as it is or change it in some way. Most of the time, the try/catch block can be limited to just one line of code, but that modification requires some manual intervention. Still, it is easier when there is something already there.

:-)

On Error GoTo <label>

The other common scenario is to have a more structured approach to error handling. This can be illustrated with the following code snippet:

Private Sub bttnCancel_Click()

On Error GoTo errorHandler

MsgBox ("Assume this line throws an error")

exitSub:

Exit Sub

errorHandler:

MsgBox ("An error was caught")

GoTo exitSub

End Sub

Since this code is using a pattern that is very similar to what the try/catch statement would do, the VBUC is able to identify the pattern and move it to the appropriate try/catch block:

private void bttnCancel_Click( Object eventSender, EventArgs eventArgs)

{

try

{

MessageBox.Show("Assume this line throws an error", Application.ProductName);

}

catch

{

MessageBox.Show("An error was caught", Application.ProductName);

}

}

As you can see, the functionality of this type of pattern is replicated completely, maintaining complete functional equivalence with the VB6.0 code.

Overall, the support for converting On Error statements from VB6.0 into the proper structured error handling structures in C# has come a long way. It is now very robust and supports the most commonly used patterns. So, unless you are using some strange spaghetti code or have a very peculiar way of doing things, the VBUC will be able to translate most scenarios without issues. Some of them, as mentioned, may still require human intervention, but let's face it - using On Error Resume Next shouldn't really be allowed in any programming language!! ;-)

OK, OK. I must admit I have weird taste when it comes to configuring my GUI. But recently I took it to the extreme when I (I don't know how) deleted my File menu.

I managed to work around this for a few weeks, but I finally got fed up. This gentleman gave me a solution (reset my settings): http://weblogs.asp.net/hosamkamel/archive/2007/10/03/reset-visual-studio-2005-settings.aspx

It’s well known that financial institutions are under a lot of pressure to replace their core legacy systems, and here at ArtinSoft we’ve seen an increased interest from this industry in our migration services and products, especially our Visual Basic Upgrade Companion tool and our VB to .NET upgrade services. In fact, during the last year or so we’ve helped many of these institutions move their business-critical applications to newer platforms, accounting for millions of lines of code successfully migrated at low risk, cost and time.

Margin pressures and shrinking IT budgets have always been a considerable factor for this sector, with financial institutions constantly looking for a way to produce more with less. Some studies show that most of them allocate around 80% of their budgets to maintaining their current IT infrastructure, much of which is made up of legacy applications.

Competition has also acted as another driver for legacy modernization, with organizations actively looking for a competitive advantage in a globalized world. Legacy applications, like other intangible assets, are hard for competitors to emulate, so they represent key differentiators and a source of competitive advantage. Typically, significant investments in intellectual capital have been embedded in the legacy systems over the years (information about services, customers, operations, processes, etc.), constituting the backbone of many companies.

In the past, they approached modernization in an incremental way, but recent compliance and security developments have drastically impacted financial institutions. In order to comply with new regulations, they are forced to quickly upgrade their valuable legacy software assets. Industry analysts estimate that between 20% and 30% of a bank's base budget is spent on compliance demands, so they are urgently seeking ways to reduce this cost so that they can invest in more strategic projects.

However, many institutions manually rewrite their legacy

applications, a disruptive method that consumes a lot of resources, and normally

causes loss of business knowledge embedded in these systems. Hence the pain and

mixed results that Bank Technology News’ Editor in Chief, Holly Sraeel,

describes in her article “From

Pain to Gain With Core Banking Swap Outs”. “Most players concede that such

a move (core banking replacement) is desirable and considered more strategic

today than in years past. So why don’t more banks take up the cause? It’s still

a painful—and expensive—process, with no guarantees”, she notes. “The

replacement of such a system (…) represents the most complex, risky and

expensive IT project an institution can undertake. Still, the payoff can far

exceed the risks associated with replacement projects, particularly if one

factors in the greater efficiency, access to information and ability to add

applications.”

That’s when the concept of a proven automated legacy migration solution emerges as the most viable and cost-effective path towards compliance, preserving the business knowledge present in these assets, enhancing their functionality afterwards, and avoiding the dead-end trap of technological obsolescence. Even more so when this is no longer optional due to today’s tighter regulations. As Logica’s William Morgan clearly states in the interview I mentioned in my previous post, “compliance regimes in Financial Services can often dictate it an unacceptable operational risk to run critical applications on unsupported software”.

“These applications are becoming a real risk and some are increasingly costly to maintain. Regulators are uncomfortable about unsupported critical applications. Migrating into the .NET platform, either to VB.NET or C# contains the issue. Clients are keen to move to new technologies in the simplest and most cost effective way so that their teams can quickly focus on developments in newer technologies and build teams with up to date skills”, he adds, referring specifically to VB6 to .NET migrations.

So, as I mentioned before, ArtinSoft has a lot of experience in large scale critical migration projects, and in the last year we’ve provided compliance relief for the financial sector. With advanced automated migration tools you can license, or expert consulting services and a growing partner network through which you can outsource the whole project on a fixed time and cost basis, we can definitely help you move your core systems to the latest platforms.

We are reviewing some programming languages that provide explicit support for integrating OO with ADTs (i.e. rules and pattern-matching). We refer to Part 1 for an introduction. We currently focus on Scala and TOM in our work. Scala provides the notion of an extractor as a way to make pattern-matching extensible. Extractors allow us to define views of classes so that we can see them in the form of patterns. Extractors behave like adapters that construct and destruct objects as required during pattern-matching, according to a protocol assumed in Scala. In this way, it becomes possible to separate a pattern from the kind of objects the pattern denotes. We might consider pattern-matching as an aspect of an object (in the sense of Aspect Oriented Programming, AOP). The underlying idea is really interesting; it is explained in more detail in Matching Objects in Scala. We want to perform some analysis in this post. Our main concern in this part, as expected, is pattern extensibility; we leave ADTs aside for a while. We will consider a situation where more support for extensibility is required and propose using delegation to cope with it, without leaving the elegant idea and scope of extractors offered by Scala. Pattern-matching by delegation could be more directly supported by the language.

Let us now see the example. Because of the complete integration between Scala and Java, extractors in Scala interact nicely with proper Java classes, too. However, let us use just proper Scala class declarations in our case study, as an illustration. In this hypothetical case, we deal with a model of “beings” (“persons”, “robots”, “societies” and the like). We want to write a very simple classifier of beings using pattern-matching. We assume that the classes of the model are sealed for the classifier (we are not allowed to modify them). Hence, patterns need to be attached externally. We start with some classes and assume more classes can be added later, so we will need to extend our initial classifier to cope with new kinds of beings.

Thus, initially the model is given by the following declarations (we only have “person” and “society” as kinds of beings):

trait Being

class Person extends Being {

var name:String = _

var age:Int = _

def getName() = name

def getAge() = age

def this(name:String, age:Int){this();this.name=name;this.age=age}

override def toString() = "Person(" + name+ ", " +age+")"

}

class Society extends Being{

var members:Array[Being] = _

def getMembers = members

def this(members:Array[Being]){this();this.members=members}

override def toString() = "Society"

}

We have written the classes in a Java style to emphasize that they could be external to Scala. Now, let us introduce a first classifier. We encapsulate all the parts in a class for our particular purposes, as we explain later on.

trait BeingClassifier{

def classify(p:Being) = println("Being classified(-):" + p)

}

class BasicClassifier extends BeingClassifier{

object Person {

def apply(name:String, age:Int)= new Person(name, age)

def unapply(p:Person) = Some(p.getName)

}

object Society{

def apply(members:Array[Being]) = new Society(members)

def unapply(s:Society) = Some(s.getMembers)

}

override def classify(b:Being) = b match {

case Person(n) => println("Person classified(+):" + n)

case Society(m) => println("Society classified(+):" + b)

case _ => super.classify(b)

}

}

object SomeBeingClassifier extends BasicClassifier{

}

Method “classify(b:Being)”, based on pattern-matching, reports whether or not “b” can be recognized and displays a corresponding message. Notice that objects “Person” and “Society” within class “BasicClassifier” are extractors. In each case, we have specified both the “apply” and “unapply” methods of the protocol; however, just “unapply” is actually relevant here. Such methods use destructors to extract the parts of interest for the pattern being defined. For instance, in this case, for a “Person” instance we just take its field “name” in the destruction (“age” is ignored). Thus, the pattern with shape “Person(n:String)” differs from any constructor declared in class Person. That is, pattern and constructor can be, in that sense, independent expressions in Scala, which is a nice feature.
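To make the extractor protocol concrete, here is a minimal, self-contained sketch that is separate from the classifier above. The names ExtractorSketch, Employee and NameOnly are hypothetical and only serve the illustration; the point is that any object with a suitable “unapply” method can be used as a pattern, and that the pattern does not need to mirror a constructor.

```scala
object ExtractorSketch {
  // Hypothetical class for illustration; it is not part of the "beings" model.
  class Employee(val name: String, val age: Int)

  // An extractor: the pattern NameOnly(n) exposes only the name,
  // even though the constructor of Employee takes two arguments.
  object NameOnly {
    def unapply(e: Employee): Option[String] = Some(e.name)
  }

  // The match first checks that x is an Employee, then calls NameOnly.unapply.
  def describe(x: Any): String = x match {
    case NameOnly(n) => "employee named " + n
    case _           => "unknown"
  }

  def main(args: Array[String]): Unit = {
    println(describe(new Employee("john", 20)))
    println(describe("not an employee"))
  }
}
```

Since NameOnly defines no “apply” method, it can only be used for destruction, which is all the pattern needs.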

Now, let us suppose we know about further classes “Fetus” and “Robot” deriving from “Being”, defined as follows:

class Fetus(name:String) extends Person(name,0)

{

override def toString() = "Fetus"

}

class Robot extends Being{

var model:String = _

def getModel = model

def this(model:String){this();this.model=model}

override def toString() = "Robot("+model+")"

}

Suppose we use the following object for testing our model:

object testing extends App {

val CL = SomeBeingClassifier

val p = new Person("john",20)

val r = new Robot("R2D2")

val f = new Fetus("baby")

val s = new Society(Array(p, r, f))

CL.classify(p)

CL.classify(f)

CL.classify(r)

CL.classify(s)

}

We would get:

Person classified(+):john

Person classified(+):baby

Being classified(-):Robot(R2D2)

Society classified(+):Society

In this case, we observe that “Robot” instances are negatively classified while “Fetus” instances are recognized as “Person” objects. Now, we want to extend our classification rule to handle these new classes. Notice, however, that unfortunately we do not have direct support in Scala for achieving that. One reason is that “object” elements are final (they are instances) and consequently cannot be extended. In our implementation, we simply create a new classifier extending the “BasicClassifier” above. We notice, however, that the procedure we follow is systematic and amenable to automation.

class ExtendedClassifier extends BasicClassifier{

object Fetus {

def apply(name:String) = new Fetus(name)

def unapply(p:Fetus) = Some(p.getName())

}

object Robot {

def apply(model:String) = new Robot(model)

def unapply(r:Robot) = Some(r.getModel)

}

override def classify(p:Being) = p match {

case Robot(n) => println("Robot classified(+):" + n)

case Fetus(n) => println("Fetus classified(+):" + n)

case _ => super.classify(p)

}

}

object SomeExtendedClassifier extends ExtendedClassifier{

}

As expected, “ExtendedClassifier” delegates to its parent “BasicClassifier” any classification that is not covered by its own classify method. If we now change our testing object:

object testing extends App {

val XCL = SomeExtendedClassifier

val p = new Person("john",20)

val r = new Robot("R2D2")

val f = new Fetus("baby")

val s = new Society(Array(p, r, f))

XCL.classify(p)

XCL.classify(f)

XCL.classify(r)

XCL.classify(s)

}

We would get the correct classification:

Person classified(+):john

Fetus classified(+):baby

Robot classified(+):R2D2

Society classified(+):Society

As already mentioned, this procedure could be more directly supported by the language, such that a higher level of extensibility could be offered.
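One way to approximate pattern-matching by delegation with what Scala already offers is to treat each classification rule as a PartialFunction and chain the rules with orElse. This is a different technique from the nested extractor objects used above, and it is not a language extension; the sketch uses simplified, hypothetical stand-ins for the model classes (DelegationSketch, Rule and the rule names are our own assumptions).

```scala
object DelegationSketch {
  // Simplified stand-ins for the model classes (hypothetical, for illustration only).
  trait Being
  class Person(val name: String) extends Being {
    override def toString = "Person(" + name + ")"
  }
  class Robot(val model: String) extends Being {
    override def toString = "Robot(" + model + ")"
  }

  // A rule is a partial function: it is defined only for the beings it recognizes.
  type Rule = PartialFunction[Being, String]

  val personRule: Rule = { case p: Person => "Person classified(+):" + p.name }
  val robotRule: Rule  = { case r: Robot => "Robot classified(+):" + r.model }
  val fallback: Rule   = { case b => "Being classified(-):" + b }

  // The basic classifier knows persons only; anything else reaches the fallback.
  val basic: Rule = personRule.orElse(fallback)

  // The extended classifier tries its own rule first and delegates the rest to basic.
  val extended: Rule = robotRule.orElse(basic)

  def main(args: Array[String]): Unit = {
    val r = new Robot("R2D2")
    println(basic(r))
    println(extended(r))
    println(extended(new Person("john")))
  }
}
```

With this encoding, extending the classifier amounts to composing one more rule in front of an existing one, so no class has to be subclassed.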

Today Eric Nelson posted on one of his blogs a short interview with Roberto Leiton, ArtinSoft’s CEO.

Eric works for Microsoft UK, mostly helping local ISVs explore and adopt the latest technologies and tools. In fact, that’s why he first contacted us over a year ago, while doing some research on VB to .NET migration options for a large ISV in the UK. Since then, we’ve been in touch with Eric, helping some of his ISVs move off VB6, and he’s been providing very useful Visual Basic to .NET upgrade resources through his blogs.

So click here for the full interview, where Eric and Roberto talk about experiences and findings around VB6 to .NET migrations, and make sure to browse through Eric’s blog and find “regular postings on the latest .NET technologies, interop and migration strategies and more”, including another interview with William Morgan of Logica, one of our partners in the UK.

Abstract (or algebraic) Data Types (ADTs) are well-known modeling techniques for specification of data structures in an algebraic style.

Such specifications are useful because they allow formal reasoning about software at the specification level. That opens the possibility of automated verification and code generation, for instance, as we may find within the field of program transformation (refactoring, modernization, etc.). However, ADTs are more naturally associated with a declarative programming style (functional (FP) and logic programming (LP)) than with an object-oriented programming (OOP) one. A typical ADT for a tree data structure could be as follows, using some hypothetical formalism (we simplify the model to keep things simple):

adt TreeADT<Data>{

import Integer, Boolean, String;

signature:

sort Tree;

oper Empty : -> Tree;

oper Node : Data, Tree, Tree -> Tree;

oper isEmpty, isNode : Tree -> Boolean;

oper mirror : Tree -> Tree;

oper toString : -> String;

semantics:

isEmpty(Empty()) = true;

isEmpty(Node(_,_,_)) = false;

mirror(Empty()) = Empty();

mirror(Node(i, l, r)) = Node(i, mirror(r), mirror(l));

}

As we see in this example, ADTs strongly exploit the notion of rules and pattern-matching based programming as a concise way to specify requirements for functionality. The signature part is the interface of the type (also called sort in this jargon) by means of operators; the semantics part indicates which equalities are invariant over operations for every implementation of this specification. Semantics allows recognizing some special kinds of operations. For instance, Empty and Node are free, in the sense that no equation reduces them. They are called constructors.

If we read equations from left to right, we can consider them declarations of functions where the formal parameters are allowed to be complex structures. We also use wildcards (‘_’) to discard those parts of the parameters that are irrelevant in context. It is evident that a normal declaration without patterns would probably require more code and consequently obscure the original intention expressed by the ADT. In general, we would need destructors (getters), that is, functions to access the parts of the ADT. Hence, operation mirror might look as follows:

Tree mirror(Tree t){

if(t.isEmpty()) return t;

return new Node( t.getInfo(),

t.getRight().mirror(),

t.getLeft().mirror() );

}

Thus, some clarity can get lost when generating OOP code from ADTs: among other things, because navigation (term destruction, decomposition) needs to be explicit in the implementation.

Rules and pattern-matching have not yet become standard in mainstream OOP languages for processing general data objects. The use of regular expressions over strings has been quite common, as has pattern-matching over semi-structured data, especially for querying and transforming XML data. Such processing tends to occur via APIs and external tools outside of the language proper. Hence, the need for rules does exist in very practical applications, and in recent years several proposals have been developed to extend OOP languages with rules and pattern-matching. Some interesting attempts to integrate ADTs with OOP that are worth mentioning are Tom (http://tom.loria.fr/) and Scala (http://www.scala-lang.org/). An interesting place to look, with quite illustrative comparative examples, is http://langexplr.blogspot.com/.

We will start in this blog a sequence of posts studying these two models of integration of rules, pattern-matching and OOP in some detail. We start with a possible version of the ADT above using Scala. In this case the code generation has to be done manually by the implementer, but the language for modeling and implementing is the same, which can be advantageous. Scala explicitly supports (generalized) ADTs, so we practically obtain a direct translation.

object TreeADT{

trait Tree{

def isEmpty:Boolean = false

override def toString() = ""

def mirror : Tree = this

def height : Int = 0

}

case class Node(info:Any, left:Tree, right:Tree)

extends Tree{

override def toString() =

"("+info+left.toString() + right.toString()+")"

}

object Empty extends Tree{

override def isEmpty = true

}

def isEmpty(t : Tree) : Boolean =

t match {

case Empty => true

case _ => false

}

def mirror(t : Tree):Tree =

t match{

case Empty => t

case Node(i, l, r) => Node(i,mirror(r), mirror(l))

}

}
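As a quick check of the ADT equations, the following self-contained sketch restates the tree with a sealed trait and case classes (a common Scala idiom, slightly different from the listing above) and verifies on one sample tree that mirroring twice gives back the original. The name TreeSketch and the sample values are assumptions made for illustration.

```scala
object TreeSketch {
  // The two constructors of the ADT: Empty and Node.
  sealed trait Tree
  case object Empty extends Tree
  final case class Node(info: Any, left: Tree, right: Tree) extends Tree

  // One case per equation of the specification.
  def isEmpty(t: Tree): Boolean = t match {
    case Empty         => true
    case Node(_, _, _) => false
  }

  def mirror(t: Tree): Tree = t match {
    case Empty         => Empty
    case Node(i, l, r) => Node(i, mirror(r), mirror(l))
  }

  def main(args: Array[String]): Unit = {
    val t = Node(1, Node(2, Empty, Empty), Empty)
    println(mirror(t))
    // Case classes compare structurally, so == checks that the two trees have the same shape and contents.
    println(mirror(mirror(t)) == t)
  }
}
```

Because the trait is sealed, the compiler can warn about a missing case in a match, which keeps the functions in step with the constructors of the ADT.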

This code is so handy that I'm posting it just so I remember it. I prefer to serialize my datasets as attributes instead of elements, and it's just a matter of using a setting. See:

Dim cnPubs As New SqlConnection("Data Source=<servername>;user id=<username>;" & _

"password=<password>;Initial Catalog=Pubs;")

Dim daAuthors As New SqlDataAdapter("Select * from Authors", cnPubs)

Dim ds As New DataSet()

cnPubs.Open()

daAuthors.Fill(ds, "Authors")

Dim dc As DataColumn

For Each dc In ds.Tables("Authors").Columns

dc.ColumnMapping = MappingType.Attribute

Next

ds.WriteXml("c:\Authors.xml")

Console.WriteLine("Completed writing XML file, using a DataSet")

Console.Read()

The Find in Files option of the IDE is part of my daily routine. I use it all day long to get to the darkest corners of my code and kill some horrible bugs.

But from time to time the Find in Files functionality stops working. It starts as always but shows an annoying "No files found..." message, and it really irritated me because the files were there!!!! !@#$!@#$!@#$

Well, finally, here is a fix for this annoyance (seriously, this is not a joke; don't question the dark forces):

1. Spin your chair 3 times for Visual Studio 2003 and 4 times for Visual Studio 2005

2. In Visual Studio 2003 press CTRL + SCROLL LOCK, and in Visual Studio 2005 press CTRL + BREAK.

3. Don't laugh, this is serious! It really works.

How did ArtinSoft get into producing Aggiorno (www.aggiorno.com)? Well, after more than 15 years in the software migration market we have learned a few things, and we are convinced that developers want to increase their productivity and that automatic programming is a very good means to do just that.

Aggiorno is the latest incarnation of ArtinSoft's proven automatic source code manipulation techniques. This time they are aimed at web developers.

Aggiorno, in its first release, offers a set of key automated improvements for web pages:

- Search engine indexing optimization

- User accessibility

- Error free, web standards compliance

- Cascading Style Sheet standard styling

- Site content and design separation

Aggiorno's unique value proposition is the encapsulation of source code improvements, utterly focused on web developer productivity in order to quickly and easily extend business reach.

At Microsoft TechEd in Orlando this Tuesday, June 3rd, 2008, we announced the availability of Beta2, in which we have included all the suggestions from Beta1.

Download the Aggiorno Beta2 now and let us know what you think.

As mentioned previously, the migration process is now an ally of every company attempting to revamp its software systems. It is imperative to define rules for measuring the throughput of the process, so that all the options the market offers can be compared. But how can such rules be defined when every vendor employs proprietary technology that differs from the others?

After witnessing the whole process, the impressions left in the user’s mind will determine the judgment of a given migration tool and how it performs. To make sure this judgment is fair, here are some concepts, ideas and guidelines about how the migration process should be done and, most importantly, how it should be measured.

- Time:

Human effort is precious; computer effort is arbitrary, disposable and reusable. An automated process can be repeated as many times as necessary, as long as its design allows the algorithms to accept all possible input values. A migration can be done with straightforward one-to-one transformation rules, which produce poorly mapped items that need small adjustments. Regardless of their size, those adjustments must be made by people, so a few careless rules may turn into hundreds of human hours spent fixing small issues; remember, we are dealing with large enterprise software products, where a single seemingly harmless imperfection can be replicated millions of times. Another possible scenario is complex rules that search for patterns and complex structures in order to generate equivalent patterns on the other side but, as with many AI tasks, this may take a lot of computing effort because of the immense set of calculations needed to analyze the original code and synthesize new constructions. For the sake of performance, the user must identify which resource is more valuable: the time people spend fixing the tool’s output, or the computer time that more complex migration tools need to generate more human-like code.

- Translation equivalence:

Legacy applications were built using the coding standards and conventions of their time; the patterns and strategies used in the past have evolved, some for the better, while others have become obsolete. During an automated software migration there must be a way to adapt archaic techniques to newer ones; a simple one-to-one translation will reproduce the input pattern, and the resulting source code will not take advantage of the new features of the target platform. A good migration tool should detect legacy patterns, analyze their usage and look for a new pattern in the target platform that behaves the same way. Because of the time trade-off explained previously, a faster tool usually means shallow, superficial transformations that yield a poor replica of the original code or, in the best scenario, rely on a code wrapper to repair the damage. Functional equivalence is the key to a successful migration, because software migration is not only about getting the software running on the target platform; it is also about adapting to a new set of capabilities and actually using them.

With that in mind, a comparison between different tools becomes clearer. Leaving aside the competitiveness of the market, readers should separate the facts from misleading marketing slogans and appraise the resources to be spent during a migration process. Saving a couple of days of computer time may cost hundreds of human hours, which in the end will not cure the faulty core but will just make it run.

Before starting any migration from Visual Basic 6.0 to the .NET Framework (either VB.NET or C#), we always recommend that our clients perform at least a couple of pre-migration tasks that help streamline a potential migration process. Here is a brief summary of some of them:

- Code Cleanup: Sometimes we run into applications that have modules that haven't been used in a while, and nobody really knows if they are still in use. Going through a Code Cleanup stage greatly helps reduce the amount of code that needs to be migrated, and avoids wasting effort on deprecated parts.

- Code Advisor: It is also recommended that you execute Microsoft's Code Advisor for Visual Basic 6.0. This Visual Studio plug-in analyzes your VB6 code and points out programming patterns that present a challenge for the migration to .NET. Some of these patterns are automatically resolved by the VBUC, but even then, you should always use this rule of thumb: if you use high-quality VB6 code as input for the VBUC, you get high-quality .NET code as output. Here is a brief example of the suggestions you'll get from the Code Advisor:

- Arrays that are not based on a zero index: Should be fixed in VB6

- Use of #If: Only the part that evaluates to "true" will be converted. This may cause problems after the conversion

- Use Option Explicit and Late binding, declare X with earlybound types: The VBUC is able to deduce the data types of most variables, but if you have an opportunity to fix this in VB6, you should take advantage of it

- Trim, UCase, Left, Right and Mid String functions: The VBUC is able to detect the data type being used and interpret the function (for example, Trim()) as the correct string function (in the example, Trim$()). It is still recommended that you fix it before performing the migration.

There are other tasks that may be necessary depending on the project and client, like developing test cases, training the developers on the .NET Framework, preparing the environment, and countless other specific requirements. But if you are considering a .NET migration, you should at the very least take into consideration performing the tasks mentioned above.

Enterprise software keeps pushing the limits of size and scalability; small companies depend on custom-tailored software to manage their business rules, and large enterprises with onsite engineers deal on a daily basis with the challenge of keeping their systems up to date and running on cutting-edge technology.

In both cases the investment made in software systems to support a given business is high and, regardless of whether the software was purchased from another company or built and maintained in-house, updating the current systems and platforms will never stop being critical.

Any enterprise software owner, designer or programmer must be aware of market trends in operating systems, web technologies, hardware specs, and software patterns and brands; because of the fast-moving nature of the IT industry, it takes the blink of an eye to become obsolete.

Let’s recall the transition from VB6 to VB.NET, which brought a lot of new technology and capabilities that promised to take applications where it previously seemed impossible: web services and remoting facilities, numerous data providers accessible through a common interface, and other wonders presented with the .NET Framework. However, all these features can be very difficult, or nearly impossible, to incorporate into legacy applications. At that point it became mandatory to get that software translated to the new architecture.

Initially the idea was to redesign the entire system using those new features in a natural way, but this implies consuming large amounts of resources and human effort to recreate every single module, class, form, etc. This process results in a completely new application running on new technology that needs to be tested in the final environment, and that will impact production performance because it has to be tested against real business challenges. In the end, we get a new application attempting to copy the behavior of the old programs, and a huge amount of resources spent.

Since this practice is exhausting for the technical resources and for the production metrics, computer science research on functionally equivalent automated processes was used to create software capable of porting an application from a given source platform to a different, and possibly upgraded, one. During this translation process, the main objective is to use as many native constructions as possible in the newly generated code, in order to take advantage of the target technology and avoid the use of legacy components. If the objective is to include a new feature found in the target platform, the application can be migrated first and the feature then added more naturally than by building communication subprograms to connect that new capability with the old technology.

This process is very promising because it allows a new system to be created from the previous one with minimal human effort, by establishing transformation rules that take the source constructs and generate equivalent constructs in the desired technology. Nevertheless, it will still require human input, especially for very abstract constructs and user-defined items.

All the comparisons made so far to measure the benefits of redesign versus migration point to the second practice as the faster and more cost-effective one, but now another metric becomes crucial. The automated stage is done by computers using proprietary technology that depends on the vendor of the migration software, but how extensive will the manual changes be? And how hard will it be to translate the constructs that were not migrated?

The quality metrics of the final product are redefined because a properly designed application will be translated with the same design considerations. This means that a given application will be migrated keeping the main aspects of its design, and the only changes in the resulting source code will be minor improvements in some language constructs and patterns. The new quality metrics therefore become: maximize the automation ratio, minimize the amount of manual work needed, generate more maintainable code, and reach the testing stage faster.

Aggiorno is an add-in for Visual Studio 2005/2008 that can swallow horrible non-validating markup and help you make an ASP.NET site web standards compliant with little effort. With Aggiorno web developers can improve their ASP.NET or HTML sites by making them comply with the latest web standards and incorporating the latest technology trends. This will immediately mean increased productivity and immediate business value.

Beta 1 has just been released, so you might want to give it a try and send some suggestions to the development team.

Last March I attended the Microsoft Inner Circle conference in Bellevue, WA, in which they presented the 2008 version of Visual Studio Team System. This was my first view of VSTS, and I was impressed by some of the features they included to help developers in tasks such as gathering statistics on their source code, writing and executing unit tests, and looking for potential performance bottlenecks.

We were also introduced to Visual Studio Testing Edition, which contains a set of tools to help QA teams automate tasks such as tracking and managing stabilization processes, controlling code churn and performing load tests.

From a tester’s or developer’s perspective, I have no doubt that VSTS is going to be a great tool; however, I think it still needs to extend its support for Project Management tasks, as well as database design and requirements elicitation, documentation and tracking. We were told that many of these features will be included in the next version of VSTS, code named “Rosario”.

More information on VSTS can be found at http://msdn.microsoft.com/en-us/teamsystem/default.aspx

Here is an excerpt from an article that Greg DeMichillie wrote in the April edition of Directions on Microsoft:

"The planned follow-on release to Windows Vista, code-named Windows 7, will not include the Visual Basic 6.0 (VB 6) runtime libraries, Microsoft has begun informing customers. This sets a timeframe for the final end of support for the runtime."

As we have reported on several occasions in this blog, Microsoft is taking all the normal steps to retire a technology from the market. Visual Basic 6 was, and still is, a tremendously popular technology, but nevertheless it will have to go away.

Jarvis Coffin once said: "All technologies fade away, but they don’t die." This is most probably what is going to happen to VB6 (hey.. we still have COBOL code written more than 30 years ago that is alive and kicking!!!) but the question I have for you is: will you embrace the new technology? Or will you fade away with it?

It is time to upgrade your skills as a developer and also to migrate your application to greener grounds.

ArtinSoft has been hugely successful at migrating customers as Eric Nelson (Microsoft UK DPE and blogger) recently mentioned: "Artinsoft have a lot of VB6 migration experience and can help you do the migration - either by licensing their VB Upgrade Companion or by taking advantage of their migration services. Artinsoft are doing some great work with some of my UK ISVs helping them move off VB6."

If you have any questions or comments regarding your migration strategy let's cover them in this blog.

UPDATE March 11th 2009: The title of this post was: "VB Runtime NOT in next Windows". However, Microsoft has recently updated the support policy for Visual Basic 6 Runtime. The new policy states that the VB runtime is now supported for the full lifecycle of Windows 7.

PS: You can read the inflammatory comments I got over the past week below!

We found an interesting bug during a migration. When the code iterated through the controls in a form and set the Enabled property on each one, the property didn't get set.

After some research my friend Olman found this workaround:

foreach (Control ctrl in Controls)

{

ctrl.Enabled = true;

if (ctrl is AxHost) ((AxHost)ctrl).Enabled = true;

}

Do you want to create a program that installs your assembly in the GAC using C#? Well, if you have that requirement or you are just curious, here is how.

I read these three articles:

Demystifying the .NET Global Assembly Cache

GAC API Interface

Undocumented Fusion

What I wanted was just a straight answer on how to do it. Well, here is how:

using System;

using System.Collections.Generic;

using System.Text;

using System.GAC;

//// Artinsoft

//// Author: Mauricio Rojas orellabac@gmail.com mrojas@artinsoft.com

//// This program uses the undocumented GAC API to perform a simple install of an assembly in the GAC

namespace AddAssemblyToGAC

{ class Program

{ static void Main(string[] args)

{ // Create an FUSION_INSTALL_REFERENCE struct and fill it with data

FUSION_INSTALL_REFERENCE[] installReference = new FUSION_INSTALL_REFERENCE[1];

installReference[0].dwFlags = 0;

// Using opaque scheme here

installReference[0].guidScheme = System.GAC.AssemblyCache.FUSION_REFCOUNT_OPAQUE_STRING_GUID;

installReference[0].szIdentifier = "My Pretty Aplication Identifier";

installReference[0].szNonCannonicalData= "My other info";

// Get an IAssemblyCache interface

IAssemblyCache pCache = AssemblyCache.CreateAssemblyCache();

// Stop early if no path was given or the file does not exist

if (args.Length == 0 || !System.IO.File.Exists(args[0]))

{ Console.WriteLine("Hey! Please use a valid path to an assembly, assembly was not found!"); return; }

String AssemblyFilePath = args[0];

int result = pCache.InstallAssembly(0, AssemblyFilePath,installReference);

// NOTE: someone recently reported a problem with this code, and I tried this:

// int result = pCache.InstallAssembly(0, AssemblyFilePath, null); and it worked. I think it is a marshalling issue; I will probably review it later

Console.WriteLine("Process returned " + result); Console.WriteLine("Done!");

}

}

}

And here's the complete source code for this application: DOWNLOAD SOURCE CODE AND BINARIES

The date has arrived: Visual Basic 6 leaves Extended Support today.

Rob Helm recently wrote on "Directions on Microsoft": "Some organizations will let support lapse on the VB6 development environment, gambling that any serious problems in the VB6 environment has already been discovered" Additionally, Rob adds: "... organizations remaining loyal to VB6 applications will have to make increasingly heroic efforts to keep those applications running as their IT environments change."

Organizations that GAMBLE with their business continuity, IT professionals that need to make HEROIC efforts to keep applications running! Don't you believe that maintaining an IT organization that supports a business is already enough of an effort without adding unsupported applications to the mix?

Do you plan to be a GAMBLING HERO or is it about time to consider ways out of Visual Basic 6?

Well, this might be just the right time. ArtinSoft is about to release a new version of the Visual Basic Upgrade Companion. The effort required to migrate has been reduced even further, and it now makes more sense than ever to automatically upgrade your applications to C# or VB.NET.

Have you been procrastinating the decision to move? Act now!!

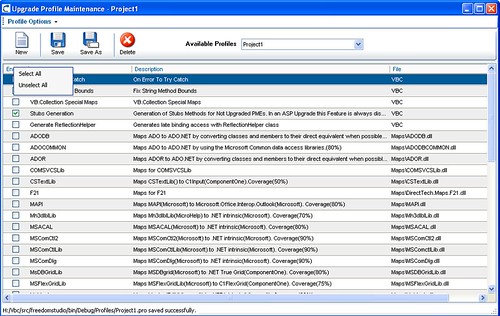

One of the nicest features of the Visual Basic Upgrade Companion version 2.0 is the support for Migration Profiles. These profiles give you control over which translations to use for a particular migration, improving the quality of the generated code and applying only the conversions desired (for example, for specific third party components). Profiles are managed through the Profile Maintenance screen, as shown here:

If you look at the screen in some detail, you will see some of the features included with the tool. Here you can select, for example, to convert the MAPI (Mail API) library to the Microsoft.Office.Interop.Outlook component, and the screen shows that this particular conversion has a coverage of 80%. This library shows why Migration Profiles are important. If the application you are migrating is an end-user application that is installed locally on the user's desktop inside your enterprise, and MS Outlook is part of your standard installation on these desktops, then it should save you quite a bit of work to let the VBUC perform this transformation. If, however, it is a server-side application where MS Office is very rarely installed, then having this option selected will only cause you additional work later on, as you will have to go through the code and remove the references.

Other good examples are the ADODB and ADOCOMMON plug-ins. Both of them convert code that uses ADO into ADO.NET. How they do it, however, is completely different. The first plug-in, ADODB, converts ADO into ADO.NET using the SqlClient libraries, so it only works with SQL Server. The ADOCOMMON plug-in, however, generates code that uses the interfaces introduced with version 2.0 of the .NET Framework in the System.Data.Common namespace. This allows the application to connect to any database using an ADO.NET data provider that complies with these interfaces; most major database vendors now have providers that meet that specification. So you can use either transformation depending on your target database!
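To make the difference concrete, here is a minimal hand-written sketch of what provider-neutral code against System.Data.Common can look like. It is not actual VBUC output; the class name ProviderNeutralQuery and its method are hypothetical and only illustrate that no concrete provider (such as SqlClient) needs to be named in the data access code itself.

```csharp
using System;
using System.Data.Common;

// Hypothetical helper, NOT generated by the VBUC: it only references the
// abstract System.Data.Common types, so the same code can run against any
// ADO.NET provider that supplies a DbProviderFactory.
public static class ProviderNeutralQuery
{
    // Opens a connection through the given factory and returns the first
    // column of the first row produced by the SQL statement.
    public static object ExecuteScalar(DbProviderFactory factory, string connectionString, string sql)
    {
        using (DbConnection connection = factory.CreateConnection())
        using (DbCommand command = connection.CreateCommand())
        {
            connection.ConnectionString = connectionString;
            command.CommandText = sql;
            connection.Open();
            return command.ExecuteScalar();
        }
    }
}
```

On the .NET Framework, a caller could obtain the factory with DbProviderFactories.GetFactory("System.Data.SqlClient") or with the invariant name of another installed provider. Only that one string changes when the target database changes, while SqlClient-specific code would have to be rewritten.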

The previous two examples illustrate the importance of Migration Profiles. As you can see in the screenshot above, the tool currently has a large number of plug-ins with specific transformations. This number is expected to grow over time. I will be covering some of these plug-ins in later posts. Stay tuned!

I mentioned a few days ago that ArtinSoft was about to release a new version of its VB to .NET migration product. Well, today it is official: finally, the Visual Basic Upgrade Companion v2.0 has been released! After an incredible amount of work (kudos to everyone involved), the tool now includes a series of enhancements aimed at increasing the quality of the generated code and the overall automation of the upgrade process, reducing even further the manual effort required to convert and compile your VB6 code to VB.NET or C#.

These are just some of the new features and improvements included in this version of ArtinSoft’s Visual Basic Upgrade Companion (VBUC):

- Upgrade Manager: The VBUC v2.0 now sports a dashing GUI and Command Line interface that improves the user experience. Incorporating a screen to set up migration profiles, it allows selecting and applying only those features you require for a specific conversion.

- Support for conversion of multi-project applications.

- Migration of mixed ASP and VB6 code to ASP.NET and VB.NET or C#.

- Individual conversion patterns designed to improve the migration of a large number of diverse, specific language patterns.

- Conversion of unstructured to structured code, reducing the presence of spaghetti code and improving code maintainability and evolution. This includes the transformation of frequently used patterns related to error handling. For example, the VBUC recognizes “On Error Goto” and “On Error Resume Next” patterns and replaces them with the .NET “Try-Catch” structure. It also performs “Goto” removals.

- Improved typing system, which provides additional information to assign more accurate target types to existing VB6 variables, functions, fields, parameters, etc.

- Improved Array migration, such as conversion of non-zero-based arrays and re-dimensioning of arrays.

- C# specific generation enhancements: declarations and typing, events declaration and invocation, error handling, conversion of modules to classes, indexer properties, case sensitive corrections, brackets generation for array access, variable initialization generation, and much more.

- Migration of ADO to ADO.NET using .NET Common Interfaces.

- Support for converting many new 3rd-party components such as SSDataWidgets SSDBGrid, TrueDBList80, Janus Grid, TX Text Control, ActiveToolBars, ActiveTabs, ActiveBar 1 and 2, etc.

- New helper classes that offer frequently used VB6 functionality that is either unavailable in .NET and/or that is encapsulated for the sake of readability.

- Automatic stub generation of no-maps as a proven strategy to manage overall remaining effort towards successful compilation of upgraded code.

- And much more!
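To give an idea of the error handling item in the list above, here is a small hand-written sketch of how an “On Error Goto” routine can map to a try/catch block. It is not actual VBUC output; the VB6 function shown in the comment and the C# names are hypothetical, and the code the tool generates may look different.

```csharp
using System;
using System.IO;

// Hand-written illustration, NOT actual VBUC output.
//
// Hypothetical VB6 original:
//   Function ReadFirstLine(path As String) As String
//       On Error GoTo ErrHandler
//       Open path For Input As #1
//       Line Input #1, ReadFirstLine
//       Close #1
//       Exit Function
//   ErrHandler:
//       ReadFirstLine = ""
//   End Function
public static class ErrorHandlingSketch
{
    public static string ReadFirstLine(string path)
    {
        try
        {
            // Body of the routine: everything between "On Error GoTo"
            // and "Exit Function" in the VB6 version.
            using (StreamReader reader = new StreamReader(path))
            {
                // ReadLine returns null on an empty file; VB6 would raise
                // an error there, so both versions end up returning "".
                return reader.ReadLine() ?? "";
            }
        }
        catch (Exception)
        {
            // Plays the role of the ErrHandler label.
            return "";
        }
    }
}
```

The label and the jump disappear, and the scope of the handler becomes visible in the structure of the code, which is where the maintainability gain mentioned in the list comes from.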

For more info, you can visit ArtinSoft’s website, and let us know about your specific migration requirements.